The impact of artificial intelligence (AI) on society cannot be separated from its impact on education. AI is what MIT terms an “arrival” technology: unlike computers, its presence in schools is not the result of an adoption policy. Like smartphones, it will be used by students whether schools encourage it or forbid it. These widely available, powerful AI tools pose both challenges and opportunities for education.

Educators are divided in their opinions. In a survey by The AI Education Project involving 1,054 K-12 educators, slightly more respondents (52%) said they feel positive rather than negative (48%) about AI in general.

AI optimists believe AI will support the development of student creativity and higher-order thinking. They argue a deliberate balance of new and traditional classroom practices will free teachers’ time for more personal interactions.

AI pessimists see a future where students will use AI to bypass cognition or cheat while teachers waste time and energy on surveillance. For example, English teachers who rely on writing assignments may face challenges with ChatGPT-like tools, which excel at generating essays. Pessimists also imagine a scenario where AI is overadopted, particularly in resource-constrained schools, reducing the teacher workforce and widening divides.

Reflecting these opinions, schools’ approaches to AI have ranged from outright banning or inaction to informal and sporadic experimentation to sanctioned experimentation.

The hard truth, however, is that AI is unavoidable whether we are excited or not. Our students may not choose a career in AI, but no matter what they choose, they’ll likely work with AI. As teachers, if we intend to prepare students for success, we must prepare them to thrive in a world where AI is everywhere.

A Brief Introduction To Types Of AI

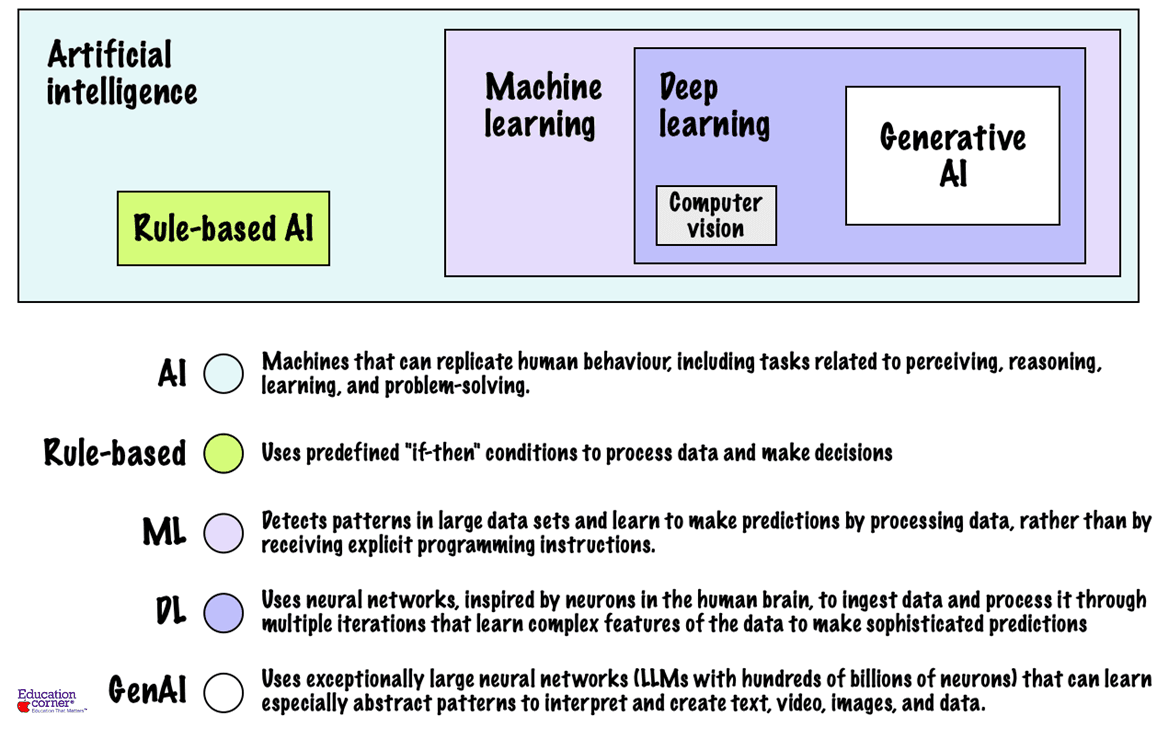

AI is a loose umbrella term that refers to a collection of methods, capabilities, and limitations, many of which are often not explicitly articulated by researchers and AI developers. It can be defined broadly as machines or software systems that mimic human intelligence to recognize patterns, solve problems, or make decisions.


All AI models essentially function as prediction machines: they learn from vast amounts of data and use that training to make predictions. The methods and techniques used to analyze data and generate predictions vary, leading to different levels and types of AI.

In education, we are particularly interested in Generative AI, but let’s briefly review some of the AI models and terminology.

Rule-Based AI

This simplest form of AI uses predefined “if-then” conditions to process data and make decisions. While it may appear to mimic human decision-making, it relies on explicitly programmed instructions, making it a reliable and predictable system for use in critical applications, including healthcare.

Rule-based AIs are simple and cost-efficient; their inner workings are known, and their outputs can be explained. However, they are inherently static and inflexible and struggle with ambiguity when predefined rules do not offer clear guidance.

They also require extensive inputs and manual coding of conditions. For instance, Google Translate used statistical rule-based AI until 2017, which required 500,000 lines of code.

Traditional automated short-answer grading systems are a good example of rule-based AI in education.
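To make the “if-then” idea concrete, here is a minimal sketch of a keyword-matching short-answer grader. It is an illustration only, not how any particular grading product works; the function name `grade_short_answer` and the keyword list are assumptions made up for this example.

```python
def grade_short_answer(answer, required_keywords, points_per_keyword=1):
    """Rule: IF a required keyword appears in the answer, THEN award points."""
    normalized = answer.lower()
    matched = [kw for kw in required_keywords if kw.lower() in normalized]
    return len(matched) * points_per_keyword, matched


# Hypothetical rubric for a question about photosynthesis.
rubric = ["sunlight", "water", "carbon dioxide"]

print(grade_short_answer("Plants use sunlight and water to make glucose.", rubric))
# (2, ['sunlight', 'water'])

# A correct idea phrased differently earns nothing, because no rule covers it.
print(grade_short_answer("Plants need light from the sun.", rubric))
# (0, [])
```

The second call shows the inflexibility described above: the rules are transparent and explainable, but an answer that expresses the right idea in unexpected words is not recognized.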

Machine Learning (ML)

Machine learning is a more advanced form of AI that learns from data. Instead of following hard-coded rules, ML systems analyze data and find patterns. Once the patterns are learned, they can make predictions or decisions without being explicitly programmed.

For example, Netflix’s recommendation system uses machine learning to suggest shows based on what you’ve watched in the past while learning from your viewing habits.
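The contrast with rule-based systems can be sketched in a few lines. The toy recommender below is not Netflix’s actual method; it simply counts which shows tend to be watched together and uses those learned counts to make a suggestion. The show titles, the viewing histories, and the function names are all hypothetical.

```python
from collections import Counter
from itertools import permutations


def learn_co_views(histories):
    """Learn from data: count how often each pair of shows shares a viewer."""
    co_views = {}
    for shows in histories:
        for a, b in permutations(set(shows), 2):
            co_views.setdefault(a, Counter())[b] += 1
    return co_views


def recommend(co_views, watched, top_n=1):
    """Predict: suggest unwatched shows most often seen alongside watched ones."""
    scores = Counter()
    for show in watched:
        for other, count in co_views.get(show, {}).items():
            if other not in watched:
                scores[other] += count
    return [show for show, _ in scores.most_common(top_n)]


# Hypothetical viewing histories, one list per viewer.
histories = [
    ["Space Docs", "Robot Wars", "Ocean Life"],
    ["Space Docs", "Robot Wars"],
    ["Ocean Life", "Baking Show"],
    ["Space Docs", "Robot Wars", "Baking Show"],
]
co_views = learn_co_views(histories)
print(recommend(co_views, ["Space Docs"]))
# ['Robot Wars']
```

No one wrote a rule saying that fans of one show like another; the pattern came from the data, and it would change if the viewing histories changed.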

Deep Learning

Deep learning is a more powerful subset of ML that uses layered structures called “neural networks,” which are loosely modeled on how the human brain works. Deep learning systems are excellent at processing large amounts of unstructured data, like text, images, or voice.

Here is a video that explains the surprisingly complicated workings of a neural network in an easy-to-understand manner.

When Google Translate switched to neural-network-based translation, the number of lines of code shrank from 500,000 to just 500. Virtual assistants like Siri, Alexa, and Google Assistant use neural-network-based natural language processing (NLP) to understand and respond to voice commands.

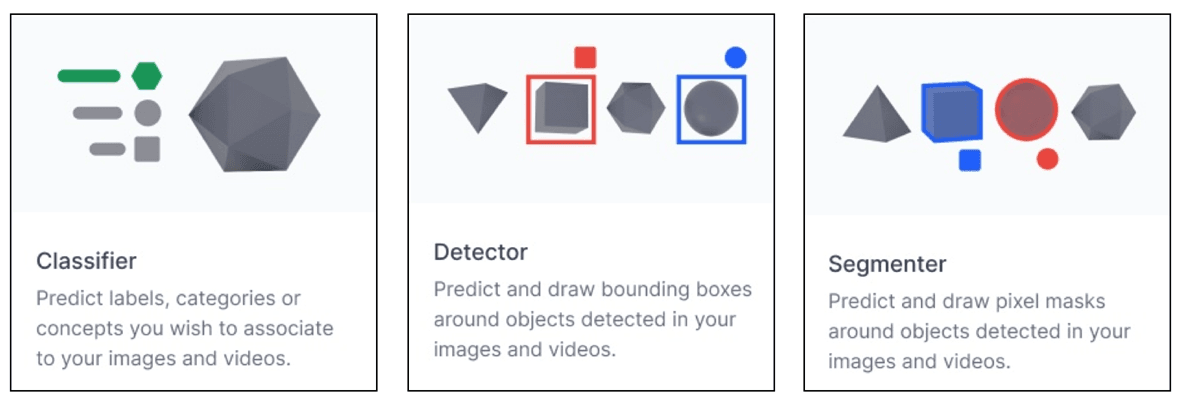

Computer Vision

Computer vision focuses on enabling machines to “see” and interpret visual data like images or videos. The most common models in this area are image classification, object detection, and segmentation models.

Image classification involves assigning a label or category to an image or video. It uses machine learning to train the AI model on a dataset of images labeled with their respective categories and develop the ability to predict the category or label for new, unseen images.

Object detection distinguishes between instances of objects in an image or video, using bounding boxes to indicate their location within the pixel space. It is helpful when identifying particular objects in a scene, such as cars parked on the street.

Segmentation models label each pixel in an image, including object boundaries, and are more precise than object detection methods. Models like Meta’s SAM 2 can even track objects across videos.
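The difference in precision between a bounding box and a segmentation mask can be shown with a tiny example. The sketch below uses a made-up 5×5 “image” in which 1 marks a pixel belonging to an L-shaped object; the grid and the function names are assumptions for illustration, not the output of a real model.

```python
def bounding_box(mask):
    """Smallest rectangle (top, left, bottom, right) containing all object pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        raise ValueError("mask contains no object pixels")
    return rows[0], cols[0], rows[-1], cols[-1]


def box_area(box):
    """Number of pixels covered by the bounding box."""
    top, left, bottom, right = box
    return (bottom - top + 1) * (right - left + 1)


def mask_area(mask):
    """Number of pixels the segmentation mask assigns to the object."""
    return sum(sum(row) for row in mask)


# Hypothetical segmentation mask of an L-shaped object.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box(mask)
print(box, box_area(box), mask_area(mask))
# (1, 1, 3, 3) 9 5
```

The box covers nine pixels, but only five of them actually belong to the object. Object detection stops at the box; segmentation keeps the pixel-level outline.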

Generative AI

Generative Artificial Intelligence (GenAI) models are the most prominent and widely known type of AI. They were first popularized by ChatGPT, which took just five days to reach one million users when released in November 2022.

GenAI models leverage extensive training data, advanced neural networks, and deep learning architectures, such as transformers and diffusion models, to generate human-like outputs.

While initial versions were unimodal, meaning they could accept only text inputs, most models today are multimodal and can accept images, audio, and video as inputs. These models can generate images, translate text into visuals, synthesize speech and audio, or produce synthetic data.

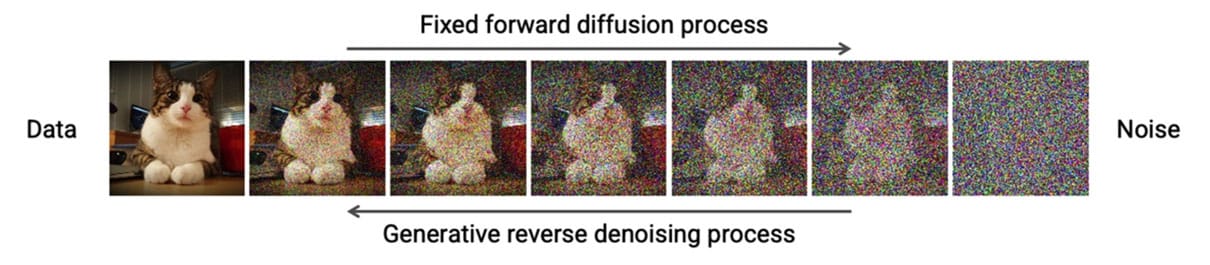

Diffusion Models

Diffusion models are a type of GenAI used to generate images or videos. They consist of a forward process (diffusion process), in which an image is progressively noised, and a reverse process, in which the noise is transformed back into a sample from the target distribution. By going back and forth over large amounts of training data, the model “learns” to reverse the diffusion process to generate new data (images).
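The forward (noising) half of this process can be sketched in a few lines. The example below assumes the common Gaussian formulation, in which each step slightly shrinks the current values and mixes in random noise. The step size `beta`, the function names, and the four-number stand-in for an image are illustrative choices, not details of any specific model. The reverse process is not shown because it requires a trained neural network.

```python
import math
import random


def noise_step(values, beta, rng):
    """One forward step: keep sqrt(1 - beta) of the signal, add sqrt(beta) noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in values]


def forward_process(values, steps, beta, rng):
    """Return the whole trajectory from the clean input to the noised result."""
    trajectory = [list(values)]
    for _ in range(steps):
        trajectory.append(noise_step(trajectory[-1], beta, rng))
    return trajectory


def signal_kept(steps, beta):
    """Fraction of the original signal still present after `steps` steps."""
    return (1.0 - beta) ** (steps / 2)


# A four-"pixel" stand-in for an image (hypothetical values).
image = [1.0, -1.0, 0.5, 0.0]
trajectory = forward_process(image, steps=200, beta=0.05, rng=random.Random(0))
print(len(trajectory))                     # 201 snapshots, clean image first
print(round(signal_kept(200, 0.05), 4))    # under 1% of the original remains
```

After enough steps the result is essentially pure noise. Training teaches the model to undo one small step at a time, which is what lets it later turn fresh noise into a new image.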

Think of it as starting with static on a TV screen and slowly transforming that static into a picture of a cat. Models like DALL-E, Stable Diffusion, and Midjourney belong to this class. Diffusion models can be used for several educational applications, such as:

- Creating custom illustrations to use in lessons.

- Visualizing historical events and scientific concepts.

- Enhancing creative writing assignments.

- Rapid development of educational games and activities.

Large Language Models

Large language models (LLMs) are predictive text generators trained on immense amounts of data. They can understand and generate natural language and other types of content to perform a wide range of tasks.
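“Predictive text generator” can be illustrated with a drastically simplified model. The sketch below counts which word follows which in a tiny made-up corpus and then predicts the most frequent follower. Real LLMs use neural networks trained on vastly more data and look at far more context than one word, so treat this only as an analogy; the corpus and function names are invented for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
counts = train_bigrams(corpus)
print(predict_next(counts, "the"))    # cat
print(predict_next(counts, "sat"))    # on
print(predict_next(counts, "zebra"))  # None
```

The model has no idea what a cat is; it only knows that “cat” followed “the” more often than anything else did in its training text.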

Here is another video that visually explains what goes on within an LLM like ChatGPT:

While these models cannot reason or produce original knowledge, they can confidently and convincingly combine information from different sources to give a semblance of understanding and reasoning.

Of the AI types covered here, LLMs arguably have the greatest potential to impact education. Teachers can use LLMs to create material that supports student learning, provide multiple examples and explanations, uncover and address student misconceptions, and help assess learning.

As we will see, they can act as a “force multiplier” if implemented cautiously and thoughtfully in aiding evidence-based teaching practices.

Impact On Education

Educators worldwide are still learning how AI will integrate into the education sector, even as AI tools and their applications continue to evolve. While the full impact remains uncertain, two pressing questions for K–12 educators are: To what extent will generative AI transform teaching, and will it enhance student learning?

A closer look at the following questions helps shed light on some of these concerns:

Will the use of AI in education grow?

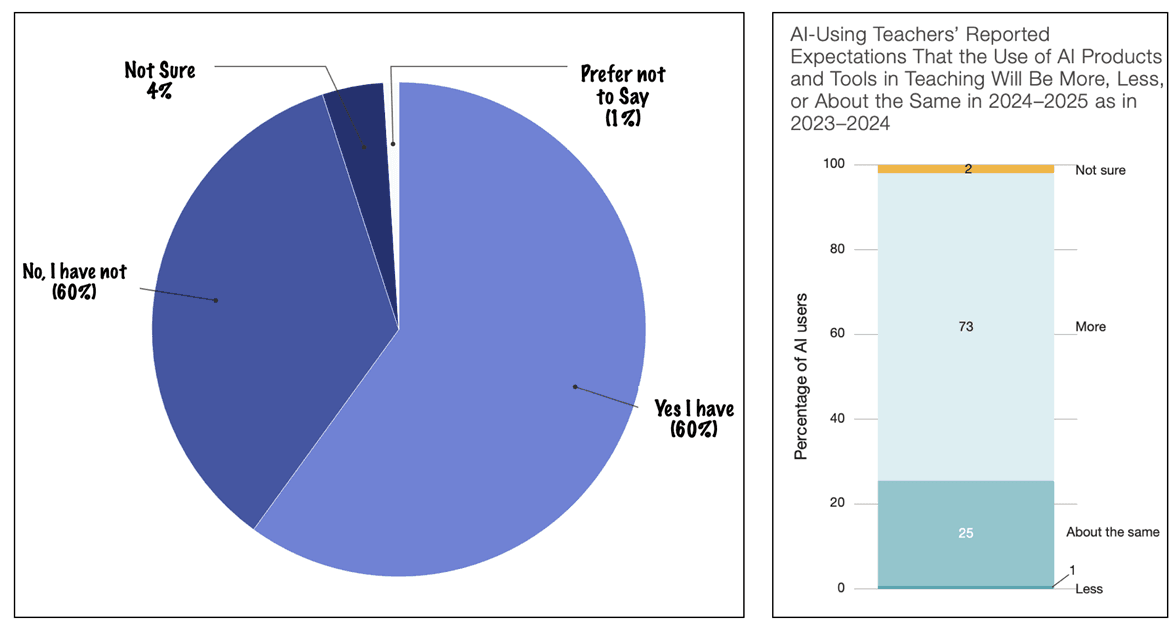

AI tools have found an early foothold in schools. According to a RAND report, 18% of teachers used AI tools as of fall 2023. A Forbes survey reports that by mid-2024 the share had risen to 60%. More importantly, about three-quarters (73%) of AI-using teachers expect to use more AI in the future.

Organizations like the U.S. Department of Education (ED) and UNESCO have recognized AI’s growing presence in education. For example, ED has developed guidelines for designing AI in education that seek to seize AI’s educational benefits while addressing risks and ethical considerations. It recommends prioritizing educators’ perspectives in developing AI solutions that enhance and support teachers’ traditional roles rather than attempting to replace them.

UNESCO has advocated equity-focused AI in education policies to narrow technological gaps within communities and worldwide.

Leading AI companies have also taken note of the education space’s unique needs and concerns regarding the responsible use of AI and have begun to adapt their products. For example, OpenAI has introduced ChatGPT Edu, a version of ChatGPT designed for higher education. The company has also hired a former Coursera Inc. executive to lead its efforts to bring its products to more schools and K-12 classrooms.

While the use of AI will grow in education, the growth will be iterative. As teachers experiment and learn how to effectively deploy, balance, and limit the use of AI, the technology will change. New generations of more powerful AI may appear on much shorter time frames, such that educators might struggle to adapt to one when another appears.

How will AI affect teacher roles?

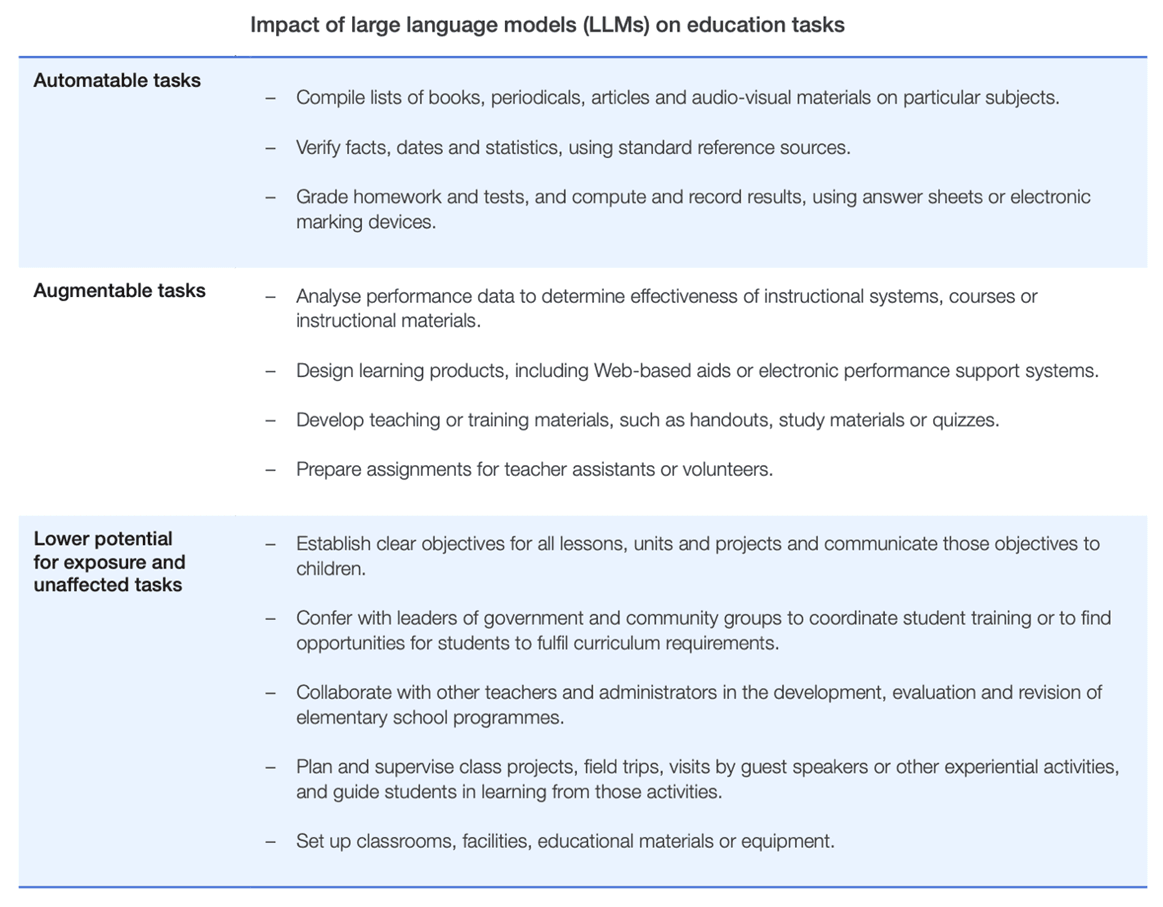

Research by the World Economic Forum, produced in collaboration with Accenture, finds that 40% of all time spent on work tasks could potentially be impacted by LLMs. This applies to teaching as well. While LLMs could automate some teaching tasks, others stand to be augmented or enhanced.

David Edwards, General Secretary of Education International, a global teachers’ union, has a global view of current teaching trends. Education International comprises 383 member organizations in 178 countries and territories, representing over 32 million teachers and education support personnel.

Edwards expects a “bit of a struggle” regarding the ethical use of AI but is not worried about it replacing the world’s teachers. While AI is set to impact knowledge workers, Edwards argues that teachers are more than just knowledge workers. In a way, they can be called “wisdom workers” because it’s one thing to have knowledge; it’s another thing to know how to apply it ethically and morally for the benefit of many.

Edwards’s reasoning becomes clearer when we flip the question and ask, “What is AI not good at?” or, in other words, “What are humans good at?” Two things that are harder for machines are exhibiting creativity and exercising social skills. Because teaching relies heavily on social insight, it requires a level of emotional intelligence and perceptiveness that AI simply cannot replicate.

For example, a qualified teacher understands how to read a classroom, respond to individual students’ needs, go away after a lesson, and reflect on what worked and what didn’t. AI cannot do this.

While AI will likely reshape the way education is delivered, the human touch teachers provide will remain indispensable, emphasizing the irreplaceable value of empathy and understanding in nurturing well-rounded, emotionally secure individuals. As educators, our role will shift towards nurturing skills such as critical thinking, creativity, and emotional intelligence, which will be paramount in a future where the ability to perform tasks that machines cannot do is essential.

Will AI bridge or widen the education gap?

The primary goal of education is to build cognitive, social, and emotional intelligence in humans. AI, at a basic level, is manufactured intelligence. In the future, a person’s potential will be dictated by their biological intelligence PLUS the artificial intelligence accessible to them.

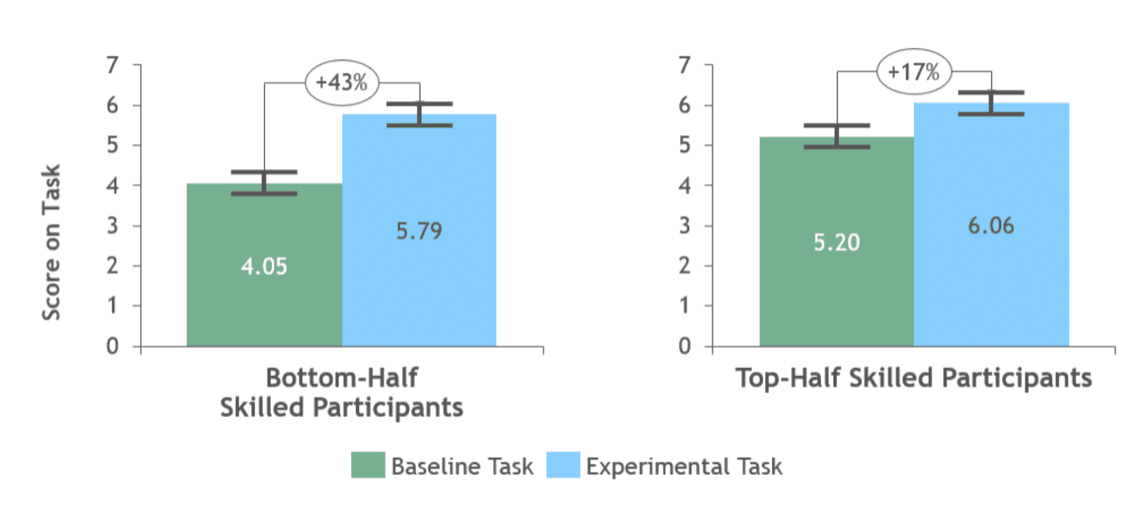

While it is too early to tell how AI might impact learning gaps in education, we can glean some insights from experiments in the private sector. In one study, AI use improved the efficiency of customer support agents by 14%, while in another, consultants could complete tasks 25% faster. In both cases, the lowest-performing workers improved the most.

These observations suggest AI’s impact isn’t evenly distributed: the largest gains are observed in those struggling the most. Should this effect extend to education, children from disadvantaged backgrounds or those with learning challenges could benefit more from access to AI-assisted learning.

Conversely, if schools restrict AI and the only place children can access it is outside school, then students from well-to-do families would benefit the most, as marginalized communities often lack the infrastructure, resources, and digital literacy necessary to fully benefit from these advancements.

Fortunately, economies of scale are in the educator’s favor. While creating AI models is expensive, the marginal cost of deploying them is small. This opens opportunities to weave AI into existing curricula. For instance, AI companions that ask questions during activities like reading can improve children’s comprehension and vocabulary.

Depending on how it is deployed, AI could reduce or exacerbate disparities. Education leaders must carefully steer AI so that it opens promising avenues for reducing inequities in education. To achieve this, their role will shift towards driving AI adoption that transforms teaching and learning to benefit low-income and historically disadvantaged students.

Will education become more personalized with AI?

While teachers will remain the cornerstone of effective learning, AI has the potential to hyperpersonalize lessons for each student, adjust the format, degree of difficulty, and pace of instruction based on the individual, and offer on-demand tutoring and feedback. Here are some ways AI can bring more personalization to learning:

- Simulating students: LLMs can serve as practice students for new teachers. They are increasingly effective and capable of demonstrating confusion and asking adaptive follow-up questions.

- Real-time feedback and suggestions for teachers: AI can provide real-time feedback and suggestions (e.g., questions to ask the class), creating a bank of live advice based on expert pedagogy. It can even produce post-lesson reports summarizing classroom dynamics, such as student speaking time or identifying questions that triggered the most engagement.

- Enabling learning without fear of judgment: fear of peer judgment holds many students back from fully engaging in many contexts. AI has the potential to increase learning without affecting self-confidence.

- Powering adaptive learning platforms: these platforms can use AI to dynamically modify the complexity and pace of learning and improve student outcomes by ensuring each student receives instruction tailored to their needs.

- Intelligent tutoring systems (ITS): these refer to AI programs that know what they teach, who they teach, and how to teach it. Research by the U.S. Department of Education indicates that intelligent tutoring systems can raise student achievement levels to the same level as one-on-one tutoring.

- Predictive analytics for student success: Micro-analytics, which involves analyzing granular data, helps gain insights into student learning patterns, strengths, and weaknesses. AI helps make data analysis more efficient and precise, providing teachers with near-real-time feedback that can be used for iterative improvement rather than sporadic, large-scale innovation.

- Supporting differentiated instruction: With students at different levels of ability, motivation, and learning styles, it can be difficult for teachers to provide each student with individualized attention. While human capacity is limited by the number of hours in a day, AI tools can generate a range of lesson plans and learning materials in just a few seconds. Here is a blog post on how to use AI to generate differentiated instruction.

These are only some examples of AI in personalized learning, which provides a glimpse into the technology’s potential to transform K-12 education by meeting the unique needs of both educators and learners.
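As a rough illustration of the adaptive-learning idea in the list above, the sketch below raises or lowers a difficulty level based on a student’s recent answers. The 80% and 50% thresholds, the 1 to 5 difficulty scale, and the function name are assumptions chosen for the example; real platforms use far richer models of student knowledge.

```python
def next_difficulty(current, recent_results, lowest=1, highest=5):
    """Pick the next difficulty level from recent correct/incorrect answers."""
    if not recent_results:
        return current  # no evidence yet, so stay at the current level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= 0.8:      # mostly correct: step up
        current += 1
    elif accuracy < 0.5:     # mostly incorrect: step down
        current -= 1
    return max(lowest, min(highest, current))


print(next_difficulty(3, [True, True, True, True, False]))  # 4
print(next_difficulty(3, [False, False, True]))             # 2
print(next_difficulty(3, [True, False, True]))              # 3
```

Even this crude rule changes the pace for each student individually, which is the core of what adaptive platforms automate at scale.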

Will AI cut down teachers’ time on unproductive tasks?

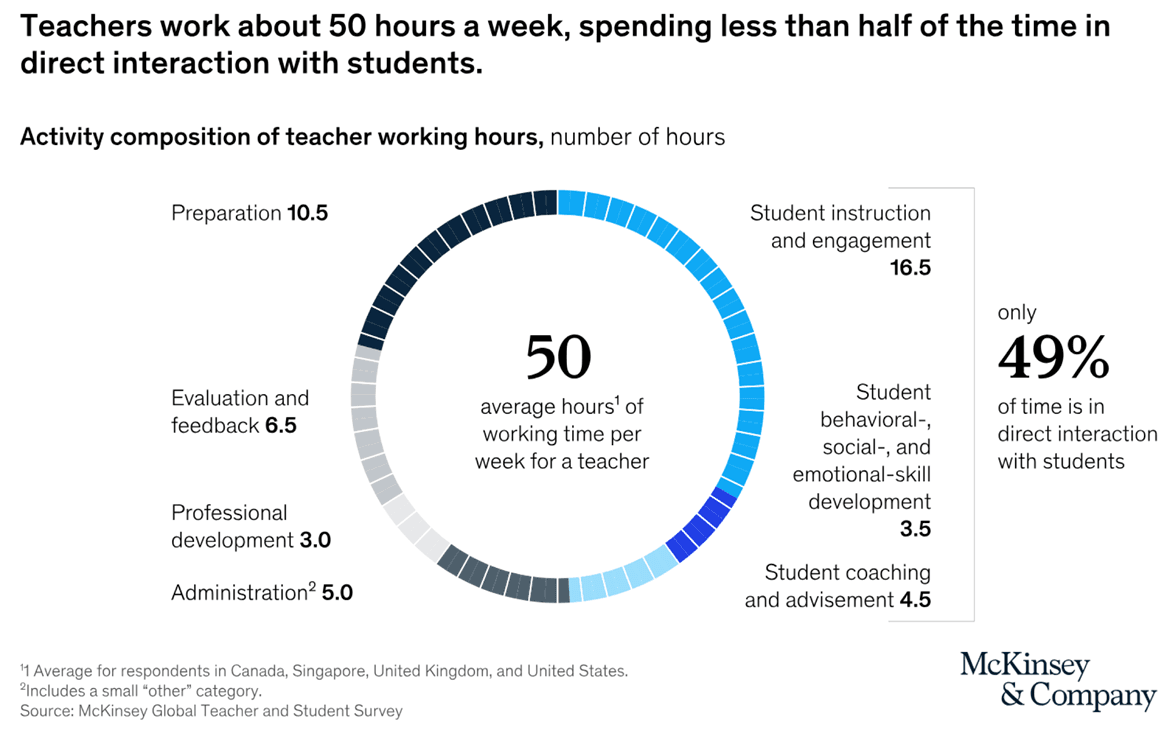

A McKinsey survey shows that K–12 teachers work an average of 50 hours a week, yet less than half of that time is spent directly interacting with students. Over the past 25 years, teachers’ hours have steadily increased due to the intrusion of “non-core” tasks like administration, curricular reform, and compliance with accountability frameworks. Technology has only exacerbated the problem due to a perceived need to be more available.

In one study, consultant, author, and speaker Leon Furze, who has held multiple school and board leadership roles, discusses the impact of AI on teacher workload and its potential to save time. Furze uses the classic Eisenhower matrix to divide the time teachers spend into four quadrants:

- Urgent and important

- Important and not urgent

- Urgent and not important

- Not urgent and not important
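The four quadrants above amount to a two-question sorting rule, which can be sketched as follows. The task names are hypothetical examples, and whether a given task counts as urgent or important is a judgment the teacher supplies, not something the code decides.

```python
def quadrant(urgent, important):
    """Return the Eisenhower quadrant number (1 to 4) for a task."""
    if urgent and important:
        return 1
    if important:
        return 2
    if urgent:
        return 3
    return 4


def sort_tasks(tasks):
    """Group (name, urgent, important) tuples by quadrant."""
    grouped = {1: [], 2: [], 3: [], 4: []}
    for name, urgent, important in tasks:
        grouped[quadrant(urgent, important)].append(name)
    return grouped


# Hypothetical week of tasks: (name, urgent?, important?)
tasks = [
    ("Cover a colleague's class this afternoon", True, True),
    ("Plan next term's unit of work", False, True),
    ("Fill in a compliance form due today", True, False),
    ("Scroll social media", False, False),
]
for number, names in sort_tasks(tasks).items():
    print(number, names)
```

Sorting a real task list this way makes it easier to see where time is going before deciding where AI can help.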

This approach helps gain a better understanding of AI’s use in cutting down teacher workload:

Quadrant 1: Urgent & Important

These are tasks that are of high value and need to be completed immediately. Examples include emergencies the teacher must handle, priorities that absolutely cannot be delegated or pushed aside, and high-value interactions with a near deadline.

AI can be helpful in this quadrant, but mainly by assisting with the urgent task at hand rather than by reducing overall workload.

For example, some classroom conversations can go down rabbit holes that are interesting for students but outside of the teacher’s areas of expertise or not appropriate for the lesson. Rather than brushing them off, teachers can use AI both to explore the ideas and to bring the lesson back on track.

AI can also be used to quickly generate lesson plans, provided the prompt gives enough detailed context to meet the needs of a particular lesson.

Quadrant 2: Important & Not Urgent

These are tasks of very high value that do not have the pressing urgency of quadrant one activities. Examples include long-term plans and strategies, activities that will only be rewarded in the future, and tasks that are beneficial to sustaining an organization or an initiative but which require significant planning and scheduling of time.

This is the quadrant where significant workload gains from GenAI are possible.

Quadrant 2 activities center on projects that require teachers to create space, dedicate time, and create opportunities to focus on their roles and future goals deliberately. Teachers spend an average of 11 hours a week on preparation activities. Effective use of AI could reduce this time to just six hours.

For example, GenAI can easily automate tasks like reformatting documents, taking notes, and converting them into proformas or templates. It can help teachers in their strategic planning, too. A prompt such as the one shown below can help plan and mitigate risks of behavior incidents by identifying potential problems:

Here’s a unit of work. Identify places where students might become disengaged, where roadblocks could occur, or where the volume of work might cause problems for students completing tasks. <copy/paste or upload unit of work>

GenAI can also help make sense of data, identify patterns and trends, and use insights to inform instructional decisions and school-wide initiatives. For example:

I have a spreadsheet of my students’ scores on the last three formative assessments in my <subject> class. Identify any patterns or trends in the data and suggest two or three potential interventions or instructional strategies I could use to support students who are struggling with specific concepts.

Quadrant 3: Urgent & Not Important

These tasks are often other people’s problems that have either been delegated to the teacher or descended from an external organization, such as compliance or accreditation. Deadlines have to be met, but the activity is not necessarily important for day-to-day work.

GenAI can be used, for example, to make sense of an email from a parent, particularly one that is long, complex, or fraught with emotion, and to produce a draft response without much mental energy. Such AI features are built into most platforms and don’t require additional investment.

Quadrant 4: Not Urgent & Not Important

These are generally time-wasting activities (for example, endlessly scrolling social media) where AI isn’t the answer. Quadrant 4 is not a good place to spend your time and must be avoided.

What ethical concerns does AI present?

Bias and Fairness

AI models can have inherent biases that teachers and students should be aware of. AI models, such as LLMs, use statistical algorithms to analyze vast amounts of data to determine patterns and make textual connections. This process is known as training. Because a trained model mimics the data it was trained on, human and systemic biases in data impact the output.

AI models can also recreate more subtle but pervasive social patterns, like biases and stereotypes. Because these patterns reflect the (often unequal) status quo of the world, they can be hard to measure and track down, yet as more and more people use these models, their cumulative effect can worsen existing issues.

Social issues prominent in society today, such as gender inequality, are often reproduced by these language models, and sometimes models even produce text that is more biased than reality. For example, imagine using a model to create stories, and those stories only show women in certain roles, such as always being at home, or describe them mainly in terms of how they look.

Data Privacy

Many LLMs explicitly use conversations with their users as training data to improve future versions. While companies attempt to detect and scrub sensitive information from these conversations, their automatic methods are far from perfect. Even if an LLM doesn’t leak your personal information, there is always a risk of a data breach, which would expose the information.

In general, it is best to think of AI chatbots as potentially public data warehouses rather than personal private tools. Below is a list of many types of student data that should not be shared with an AI tool:

- Personally Identifiable Information (PII) – Names, addresses, phone numbers, email addresses, social security numbers, student identification numbers, birth dates.

- Educational Records – Grades, transcripts, class schedules, disciplinary records, disabilities, and Individual Education Plans (IEPs).

- Health Information – Medical records, health conditions, allergies, medication information, therapy records.

- Financial Information – Family income, financial aid information, bank account details.

- Behavioral or Disciplinary Records – Disciplinary actions, behavior reports, counseling records.

- Photos or Videos – Images or recordings of students without explicit consent.

- Communication Logs – Personal messages, emails, and communication with parents or guardians.

One approach to protecting student data privacy when using an AI chatbot is anonymizing and generalizing the data. This means removing PII and using hypothetical scenarios that mirror students’ needs without revealing their identity or specific, identifiable details. Here is a detailed guide by The Future of Privacy Forum on how to vet generative AI tools for use in schools.
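For teachers comfortable with a little scripting, part of the anonymizing step can be automated before text is pasted into a chatbot. The sketch below replaces known student names with neutral aliases and masks strings that look like email addresses, U.S.-style phone numbers, and Social Security numbers. The patterns, the alias scheme, and the function name are assumptions for illustration; simple patterns like these will miss many forms of PII, so the output still needs a human check.

```python
import re

# Simplified patterns; real-world formats vary far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def anonymize(text, student_names):
    """Replace listed names with aliases, then mask emails, IDs, and phones."""
    for index, name in enumerate(student_names):
        alias = f"Student {chr(ord('A') + index)}"  # A, B, C, ... (up to 26 names)
        text = re.sub(rf"\b{re.escape(name)}\b", alias, text)
    text = EMAIL.sub("[email]", text)
    text = SSN.sub("[id number]", text)
    text = PHONE.sub("[phone]", text)
    return text


note = "Maria Lopez (maria.lopez@example.org, 555-123-4567) struggles with fractions."
print(anonymize(note, ["Maria Lopez"]))
# Student A ([email], [phone]) struggles with fractions.
```

The learning need (“struggles with fractions”) survives, which is the part the AI tool actually needs, while the identifying details do not.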

Transparency

Applications built for educational use were traditionally based on simple, traceable algorithms with interpretable instructions. With advanced AI models, this is no longer the case. Their internal workings are invisible to the user: you can feed them input and get output, but you cannot examine the system’s code or the logic that produced the output.

Machine learning professionals refer to this as the Black Box Problem. Researchers don’t fully understand how machine-learning algorithms, particularly deep-learning algorithms, operate.

When used in critical areas like education and healthcare, this raises questions about trust. These models can write essays, solve equations, and create educational content with little transparency on how these answers are derived.

Students trust their educators to impart knowledge based on reasoned and proven pedagogical methods. Similarly, educators trust students to engage with the material authentically. Generative AI, in its current “black box” state, disrupts this mutual trust.

Plagiarism

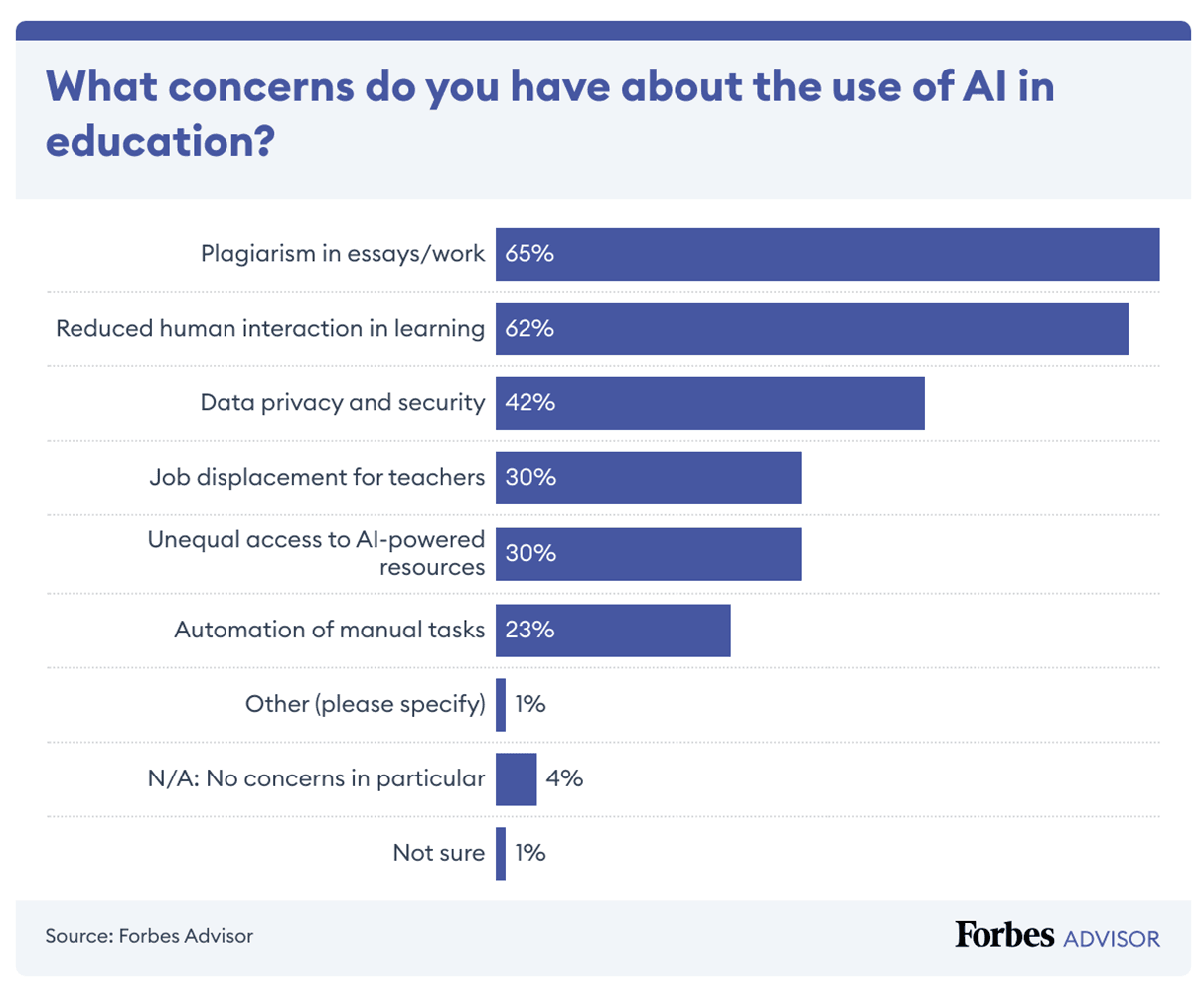

Of all the ethical concerns surrounding AI, educators are most worried about cheating and plagiarism.

The problem is not new; academic integrity has always been an issue in classrooms. However, AI has added a whole new layer to it. Today, widely accessible tools like ChatGPT can pass rigorous examinations – from the bar to MBA programs to medical licensing.

Compounding this issue, an increasing number of studies are questioning the effectiveness of AI detection tools. In 2023, OpenAI halted its initiative to differentiate human-written text from AI-generated text, citing a low accuracy rate. Computer scientists at the University of Maryland, too, concluded that AI-generated content cannot be accurately detected – at least not yet.

While there is no fool-proof solution to this issue, here are some steps teachers can take to mitigate the impact of AI-assisted plagiarism:

- Test the AI platforms to understand their limitations.

- Get to know students’ writing as much as possible.

- Do some writing in class, staying mindful of students’ limitations.

- Use formative assessment to get snapshots of progress over time.

- Evaluate your prompts and try to include a student-centered approach that features emotional intelligence and experience.

- Test AI tools together with students and discuss their limitations.

- Include a “trojan horse” word or phrase in your assignment that isn’t visible to the student. You can check for this keyword later to see whether the student pasted the prompt into an AI tool.

- As a last resort, use AI-detection tools, but be aware that they aren’t perfect and flag plagiarism where it doesn’t exist almost half of the time. They also tend to be biased against non-native English speakers.

Here’s a Vox interview of students and teachers discussing how schools can address plagiarism in the age of chatbots:

The Future of AI in Education

AI will undoubtedly join a wide range of technologies that will change how learners learn. It cannot replace teachers—they will play a central role whenever AI is applied to notice patterns and automate educational processes.

An AI-enhanced future will be more like an electric bike and less like a robot vacuum: humans will remain fully aware and fully in control, the burden will be lighter, and their effort will be multiplied by a complementary technological enhancement.

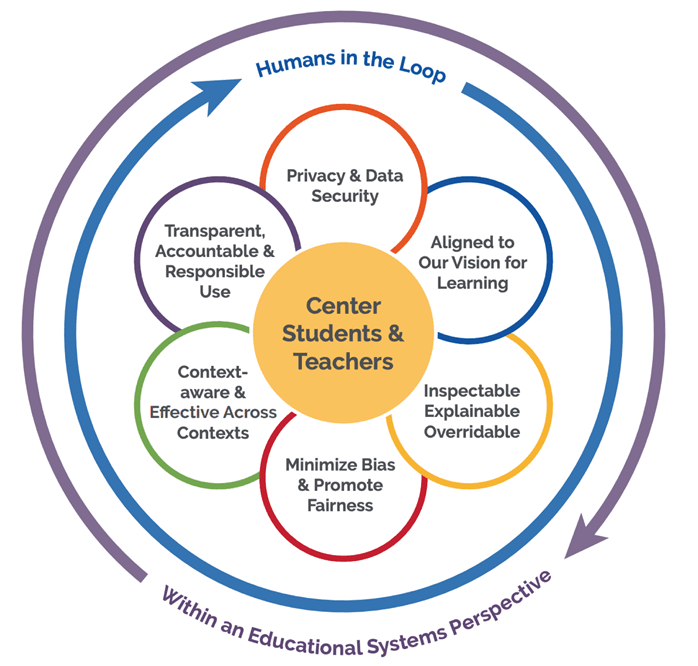

Achieving this will require a “humans-in-the-loop” approach, where the quality of educational technology is determined not only by outcomes but also by the degree to which the models at the heart of the AI tools and systems align with a shared vision for teaching and learning.

Teachers’ roles will be crucial in prioritizing ethical considerations and preserving education as a social and human-centered endeavor. As access to “manufactured intelligence” moves education goals away from rote memorization and towards the ability to apply knowledge, skills like critical thinking, creativity, and emotional intelligence, only learned through human interaction, will become invaluable.

Education’s fundamental goal is helping young people gain knowledge, awareness, and behaviors that allow them to live in harmony with each other, with the planet, and with technology. This can only be achieved using balanced approaches where AI supports educational processes while keeping humans in the driver’s seat.